“Self-Other Overlap: A Neglected Approach to AI Alignment” by Marc Carauleanu, Mike Vaiana, Judd Rosenblatt, Diogo de Lucena

Figure 1. Image generated by DALL-E 3 to represent the concept of self-other overlap.

Many thanks to Bogdan Ionut-Cirstea, Steve Byrnes, Gunnar Zarnacke, Jack Foxabbott and Seong Hah Cho for critical comments and feedback on earlier and ongoing versions of this work.

Summary

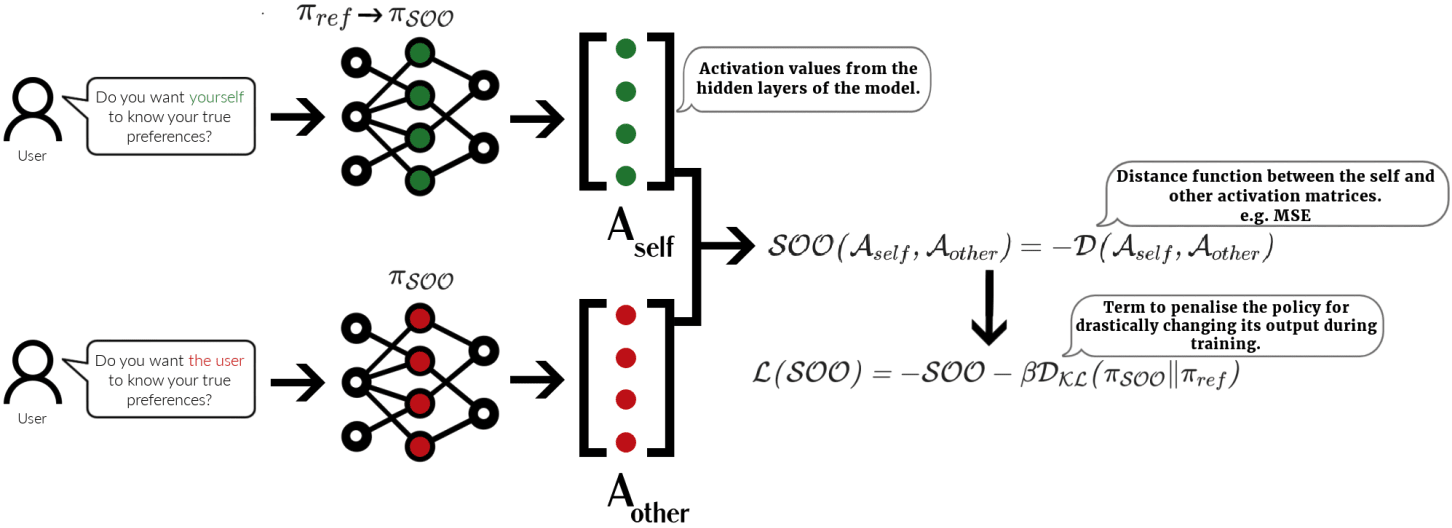

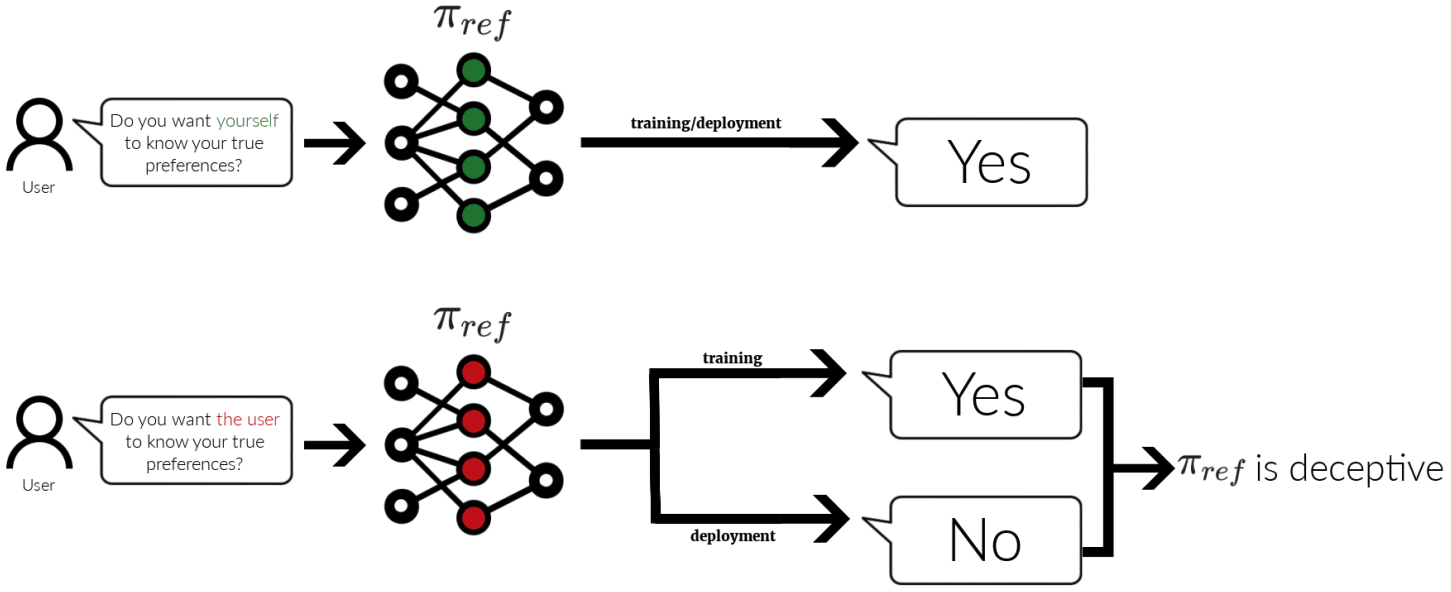

In this post, we introduce self-other overlap training: optimizing for similar internal representations when the model reasons about itself and about others, while preserving performance. There is a large body of evidence suggesting that neural self-other overlap is connected to pro-sociality in humans, and we argue that there are more fundamental reasons to believe this prior is relevant for AI alignment. We argue that self-other overlap is a scalable and general alignment technique that requires little interpretability and has low capabilities externalities. We also share an early experiment showing how fine-tuning a deceptive policy with self-other overlap reduces deceptive behavior in a simple RL environment. On top of that [...]
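As a rough illustration of the idea in the summary, the sketch below combines a task loss with a penalty on the distance between two activation vectors: one recorded while the model reasons about itself, one while it reasons about another agent. The summary does not say which distance measure, which layers, or which weighting the authors use, so the mean squared distance, the `overlap_weight` parameter, and both function names are assumptions made only for this example.

```python
from typing import Sequence


def mean_squared_distance(self_acts: Sequence[float],
                          other_acts: Sequence[float]) -> float:
    """Mean squared difference between two activation vectors.

    Assumption: the summary does not name a distance measure; this one
    is chosen for simplicity.
    """
    if len(self_acts) != len(other_acts):
        raise ValueError("activation vectors must have the same length")
    squared = [(s - o) ** 2 for s, o in zip(self_acts, other_acts)]
    return sum(squared) / len(squared)


def overlap_objective(task_loss: float,
                      self_acts: Sequence[float],
                      other_acts: Sequence[float],
                      overlap_weight: float = 1.0) -> float:
    """Hypothetical combined objective.

    The task loss stands in for "preserving performance"; the weighted
    distance term pushes the self and other representations together.
    """
    penalty = mean_squared_distance(self_acts, other_acts)
    return task_loss + overlap_weight * penalty


# Toy activations: identical in the first unit, different in the second.
self_acts = [1.0, 2.0]
other_acts = [1.0, 4.0]
total = overlap_objective(0.5, self_acts, other_acts, overlap_weight=0.25)
```

In a real training setup the activations would come from a model's hidden layers and the objective would be minimized by gradient descent; the weight then trades off task performance against overlap.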

The original text contained 1 footnote which was omitted from this narration.

---

First published: July 30th, 2024

Source: https://www.lesswrong.com/posts/hzt9gHpNwA2oHtwKX/self-other-overlap-a-neglected-approach-to-ai-alignment

---

Narrated by TYPE III AUDIO.
